Large language models (LLMs) are changing how press releases are discovered, cited, and amplified across AI-generated responses. LinkLabs distributes content across a massive network of 35,633 domains; however, it remains unclear which publishers achieve visibility, which platforms amplify citations most effectively, and how quality metrics, such as authority, positioning, and share of voice, vary.

SEO teams and researchers lack large-scale evidence connecting publisher networks to LLM citations. Understanding which domains and platforms drive meaningful exposure is critical for measuring brand presence inside AI ecosystems.

This study analyzes 2.67 million citations across 11,136 LinkLabs domains, collected between October 1 and December 24, 2025. Six major LLM platforms were evaluated: Copilot, Gemini, OpenAI, Perplexity, Grok, and Google AI Mode. The analysis focuses on domain-level coverage, platform penetration, top-performing publishers, and category-level trends.

The findings show that 31.3% of LinkLabs domains appear in LLM responses, with technology publishers leading visibility. Copilot delivers the strongest penetration, average rank, and citation score, while Gemini records the highest share of voice. These insights establish a clear benchmark for understanding how large-scale press release distribution translates into AI-driven brand exposure.

Methodology – How Were LinkLabs LLM Citations Analyzed?

This analysis investigates the citation behavior of LLM platforms when referencing domains distributed via LinkLabs. The goal is to measure both frequency and quality of citations across 6 major LLM platforms: Copilot, Gemini, Google AI Mode, Grok, OpenAI, and Perplexity. Understanding these patterns provides insight into which domains achieve visibility within AI-generated outputs and how platform design influences the dynamics of citation.

The dataset comes from LLM response records spanning October 1 to December 24, 2025, covering over 179 million citations across more than 6 million unique domains, of which 2.67 million citations reference LinkLabs domains. Each record includes the domain cited, platform name, timestamp, and associated metrics. Domains were standardized, and duplicate entries were consolidated to create a clean dataset suitable for both network-level and domain-level analysis.
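The standardization and consolidation step is not described in detail. As a minimal sketch, assuming each raw record carries a cited URL or host plus a platform name, normalization and de-duplication might look like the following. The `normalize_domain` and `consolidate` helpers and the sample records are hypothetical illustrations, not the study's actual pipeline, and this version keeps subdomains rather than reducing them to a registrable domain.

```python
from collections import Counter
from urllib.parse import urlsplit


def normalize_domain(raw: str) -> str:
    """Lowercase a cited URL or host and drop scheme, port, path, and a leading 'www.'."""
    raw = raw.strip().lower()
    # urlsplit only populates the host when a scheme or '//' prefix is present.
    host = urlsplit(raw if "//" in raw else "//" + raw).hostname or ""
    return host[4:] if host.startswith("www.") else host


def consolidate(records):
    """Collapse (raw_domain, platform) records into per-(domain, platform) citation counts."""
    counts = Counter()
    for raw_domain, platform in records:
        counts[(normalize_domain(raw_domain), platform.lower())] += 1
    return counts


# Hypothetical sample records, for illustration only.
records = [
    ("https://www.Example.com/press/1", "Copilot"),
    ("example.com", "copilot"),
    ("http://news.example.org:8080/a", "Gemini"),
]
print(consolidate(records))
```

Counting on the normalized key means that spelling variants of the same host collapse into a single entry before any network-level or domain-level metric is computed.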

The analysis framework focused on 5 key questions:

- What proportion of domains receive citations within LinkLabs?

- How does citation activity vary across LLM platforms, and which platforms deliver the highest quality citations?

- Which individual domains rank highest in visibility, authority, and share of voice?

- Which content categories demonstrate the strongest performance?

- How do overall network metrics compare to top-performing domains?

Key Takeaways: LinkLabs Impact on AI Citations

The analysis demonstrates that LinkLabs operates a high-volume distribution network with substantial impact on LLM citations. While only 31.3% of its 35,633 domains receive at least 1 citation, the absolute number, 11,136 cited domains, is significant, confirming broad reach across industries and topics.

Citation performance varies across platforms. Copilot achieves the strongest penetration and highest-quality citations, with 2.00% of its total citations from LinkLabs domains, top average rank (3.04), and highest citation score (58.69). Grok delivers the highest overall volume but lower quality metrics, while Gemini and Perplexity emphasize authoritative placement across ranks.

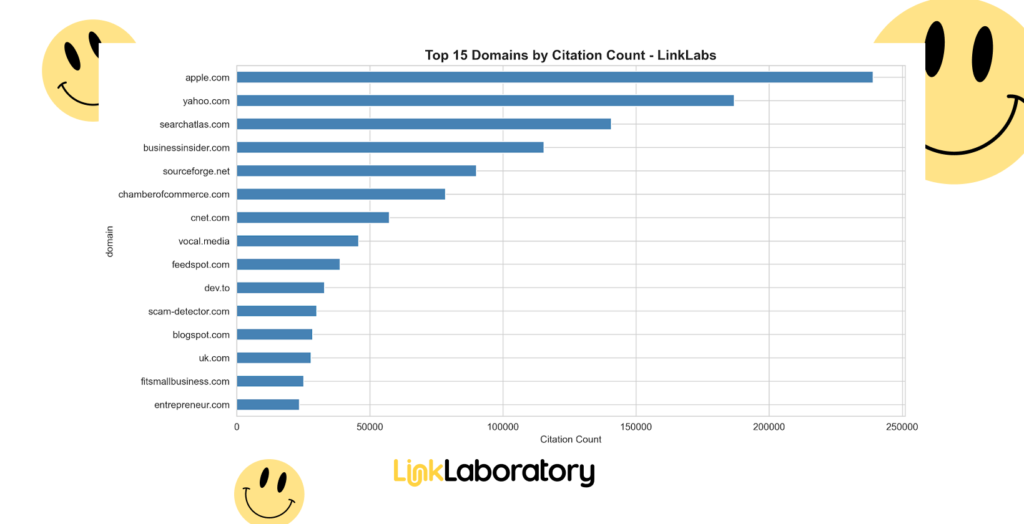

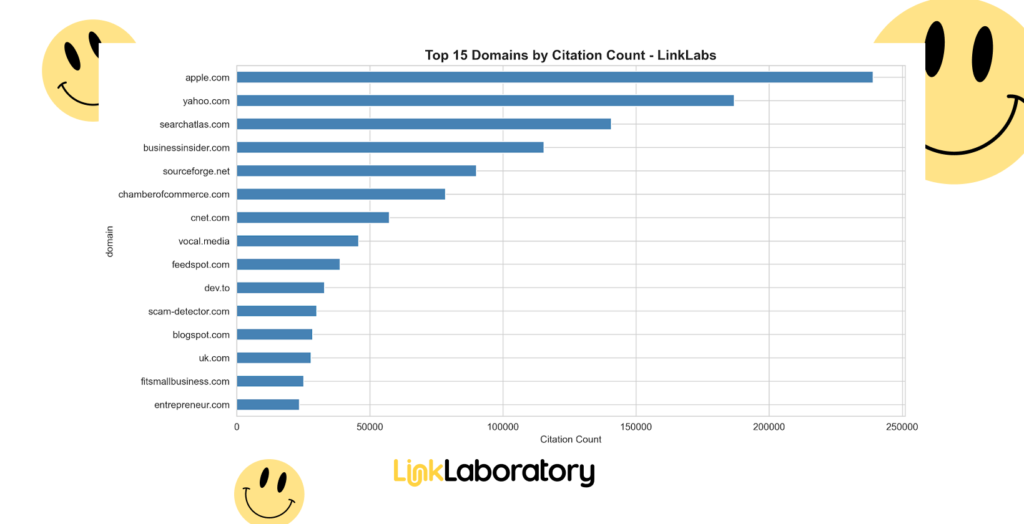

Top-performing domains are concentrated among major technology and business sites. Apple.com leads with 239,076 citations, while searchatlas.com ranks third by volume and performs strongly on rank, citation score, and share of voice. Category analysis confirms a tech-heavy focus, with approximately 700 technology domains cited, roughly 1.7 times the next largest category. Long-tail distribution across smaller, specialized categories highlights opportunities for targeted outreach.

Overall network metrics show moderate authority (average citation score 32.89), typical positioning around 11th rank, and a 9.27% share of voice. The top 15 domains outperform these averages, indicating that strategic selection of high-performing publishers can substantially improve overall citation performance.

LinkLabs’ scale, diversity, and platform reach make it a key driver of AI visibility, offering actionable guidance for optimizing publisher engagement across multiple LLM platforms.

How Do LinkLabs Domains Perform Across LLM Platforms?

I, Manick Bhan, together with the Search Atlas research team, analyzed 35,633 domains distributed through LinkLabs to measure how frequently they are cited across 6 major LLM platforms: Copilot, OpenAI, Perplexity, Grok, Gemini, and Google AI Mode. The analysis highlights how platform design and publisher scale influence AI citation coverage and visibility.

Citation Coverage Analysis

Citation coverage measures the share of LinkLabs domains appearing in at least one LLM response. This metric shows which publishers contribute to AI-generated content and where brand signals reach the AI ecosystem.

- Domains cited: 11,136

- Citation rate: 31.3%

- Domains not cited: 24,497 (68.7%)

LinkLabs operates a massive distribution network. Although only 31.3% of domains are cited, the absolute number of cited domains is substantial. These 11,136 domains represent the backbone of LinkLabs’ AI visibility, spanning diverse industries and categories. The 68.7% of uncited domains largely consist of lower-authority publishers, niche sites, regional outlets, or newer additions not yet indexed by LLMs.
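The coverage figures follow directly from the two domain counts reported above. A short sketch of the arithmetic, where the constant names are illustrative rather than taken from the study:

```python
# Domain counts reported in the coverage analysis above.
TOTAL_DOMAINS = 35_633
CITED_DOMAINS = 11_136

uncited_domains = TOTAL_DOMAINS - CITED_DOMAINS
citation_rate = CITED_DOMAINS / TOTAL_DOMAINS * 100
uncited_rate = uncited_domains / TOTAL_DOMAINS * 100

print(f"cited:   {CITED_DOMAINS:,} ({citation_rate:.1f}%)")
print(f"uncited: {uncited_domains:,} ({uncited_rate:.1f}%)")
```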

Interpretation

Unlike smaller networks with high citation percentages, LinkLabs demonstrates that scale drives reach. The total number of cited domains provides extensive coverage across AI platforms, even if a smaller fraction of the network is cited. Prioritizing these high-performing domains can significantly improve AI-driven visibility and ROI.

Which LLM Platforms Drive the Strongest LinkLabs Citation Performance?

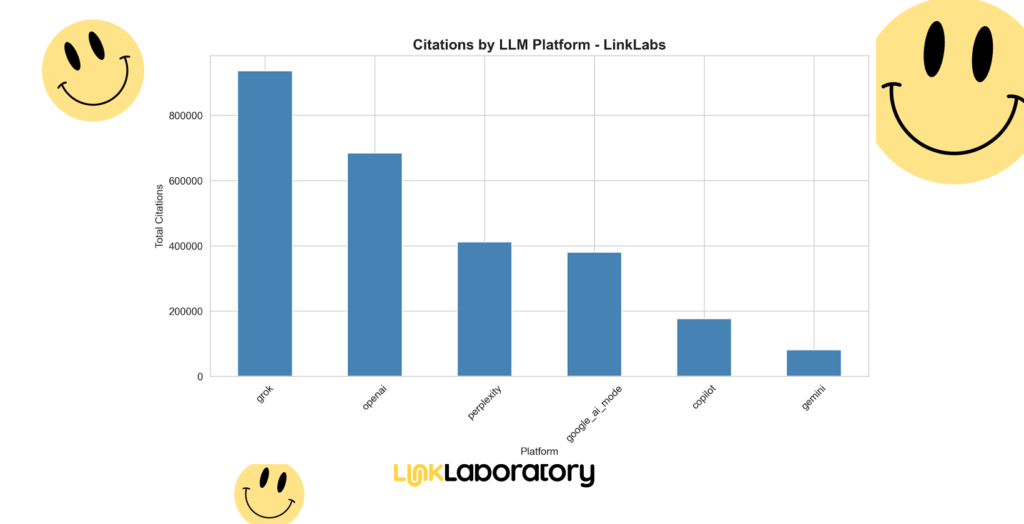

Platform-level performance matters because LLMs differ in how they retrieve, rank, and weight sources within generated responses. Measuring citations alongside rank, citation score, and share of voice reveals which platforms amplify LinkLabs visibility and which prioritize scale over quality.

Citation Volume vs. Citation Quality

The LinkLabs citation volume and quality metrics per platform are below.

| Platform | Citation Count | Total Mentions | Avg Citation Score | Avg Rank | Avg Share of Voice (%) |
| --- | --- | --- | --- | --- | --- |
| Grok | 935,889 | 1,173,738 | 15.81 | 21.82 | 2.70 |
| OpenAI | 684,208 | 706,692 | 22.15 | 12.88 | 4.20 |
| Perplexity | 412,417 | 530,315 | 45.21 | 5.84 | 9.87 |
| Google AI Mode | 380,314 | 480,846 | 26.69 | 8.44 | 7.90 |
| Copilot | 176,873 | 494,266 | 55.69 | 3.17 | 21.84 |
| Gemini | 81,011 | 118,747 | 55.87 | 3.32 | 25.47 |

Grok produces the highest absolute citation volume with 935,889 citations from 1,173,738 mentions, paired with a low average citation score of 15.81, an average rank of 21.82, and a 2.70% share of voice. This pattern reflects distribution breadth without positional influence or authority reinforcement.

OpenAI records 684,208 citations across 706,692 mentions, delivering a moderate average citation score of 22.15, an average rank of 12.88, and 4.20% share of voice.

Google AI Mode follows with 380,314 citations from 480,846 mentions, a 26.69 citation score, 8.44 average rank, and 7.90% share of voice, indicating stable but non-dominant placement.

Perplexity generates 412,417 citations from 530,315 mentions, achieving a high average citation score of 45.21, strong positioning at 5.84, and 9.87% share of voice.

Copilot delivers 176,873 citations across 494,266 mentions, producing a 55.69 citation score, an average rank of 3.17, and 21.84% share of voice, signaling selective but dominant placement.

Gemini records 81,011 citations from 118,747 mentions, matching Copilot-level authority with a 55.87 citation score, 3.32 average rank, and the highest share of voice at 25.47%.
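To make the volume-versus-quality contrast concrete, the sketch below orders the six platforms by citation count, by average citation score, and by average rank, using the figures from the table above. The dictionary layout and variable names are illustrative assumptions.

```python
# Per-platform figures copied from the table above:
# (citation_count, avg_citation_score, avg_rank, avg_share_of_voice)
platforms = {
    "grok": (935_889, 15.81, 21.82, 2.70),
    "openai": (684_208, 22.15, 12.88, 4.20),
    "perplexity": (412_417, 45.21, 5.84, 9.87),
    "google_ai_mode": (380_314, 26.69, 8.44, 7.90),
    "copilot": (176_873, 55.69, 3.17, 21.84),
    "gemini": (81_011, 55.87, 3.32, 25.47),
}

by_volume = sorted(platforms, key=lambda p: platforms[p][0], reverse=True)
by_score = sorted(platforms, key=lambda p: platforms[p][1], reverse=True)
by_rank = sorted(platforms, key=lambda p: platforms[p][2])  # lower rank is better

total_citations = sum(values[0] for values in platforms.values())

print("by volume:", by_volume)
print("by score: ", by_score)
print("by rank:  ", by_rank)
print(f"total LinkLabs citations: {total_citations:,}")
```

Comparing the three orderings side by side shows how far the volume ranking diverges from the quality rankings.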

Platform Penetration and LLM Citation Behavior

The LinkLabs penetration rates by platform are below.

| Platform | LinkLabs Citations | Total Platform Citations | LinkLabs as % | Avg Rank | Avg Citation Score |
| --- | --- | --- | --- | --- | --- |
| Copilot | 176,873 | 8,855,402 | 2.00% | 3.04 | 58.69 |
| OpenAI | 684,208 | 35,345,384 | 1.94% | 14.28 | 22.55 |
| Grok | 935,889 | 65,694,477 | 1.42% | 19.87 | 19.17 |
| Google AI Mode | 380,314 | 30,533,099 | 1.25% | 8.12 | 27.47 |
| Perplexity | 412,417 | 35,944,874 | 1.15% | 5.72 | 51.82 |
| Gemini | 81,011 | 7,963,142 | 1.02% | 3.56 | 52.60 |

Copilot records 176,873 LinkLabs citations out of 8,855,402 total platform citations, producing a 2.00% penetration rate, equivalent to roughly 1 in every 50 platform citations. It delivers the strongest positioning with an average rank of 3.04 and the highest average citation score of 58.69, confirming sustained contextual relevance and platform-level affinity.

OpenAI generates 684,208 LinkLabs citations across 35,345,384 total citations, resulting in a 1.94% penetration rate with an average rank of 14.28 and a 22.55 citation score. This reflects broad inclusion with moderate authority reinforcement rather than consistent top-response prioritization.

Grok produces the highest absolute volume with 935,889 LinkLabs citations from 65,694,477 total citations, yet its penetration rate is only 1.42%, third among the six platforms. Its 19.87 average rank and 19.17 citation score, both the weakest in the table, confirm that scale-driven exposure does not translate into influence or share-of-voice control.

Google AI Mode delivers 380,314 LinkLabs citations out of 30,533,099 total citations, yielding a 1.25% penetration rate, an 8.12 average rank, and a 27.47 citation score. This indicates stable visibility with improved positioning relative to volume-first platforms.

Perplexity records 412,417 citations from 35,944,874 total citations, producing a 1.15% penetration rate, a strong 5.72 average rank, and a 51.82 citation score, signaling authority-weighted retrieval despite lower proportional inclusion.

Gemini shows 81,011 LinkLabs citations within 7,963,142 total citations, resulting in a 1.02% penetration rate, an average rank of 3.56, and a 52.60 citation score, reinforcing precision-driven placement and high-impact visibility over raw scale.
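Penetration rate here is LinkLabs citations divided by total platform citations. The sketch below recomputes it from the counts in the table above and sorts the platforms from highest to lowest; the variable names are illustrative.

```python
# (linklabs_citations, total_platform_citations) from the penetration table above.
citations = {
    "Copilot": (176_873, 8_855_402),
    "OpenAI": (684_208, 35_345_384),
    "Grok": (935_889, 65_694_477),
    "Google AI Mode": (380_314, 30_533_099),
    "Perplexity": (412_417, 35_944_874),
    "Gemini": (81_011, 7_963_142),
}

# Penetration rate in percent, highest first.
penetration = sorted(
    ((name, linklabs / total * 100) for name, (linklabs, total) in citations.items()),
    key=lambda item: item[1],
    reverse=True,
)

for name, pct in penetration:
    print(f"{name:<15} {pct:.2f}%")
```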

Which Domains Achieve the Strongest Citation Performance Across LLMs?

The top 15 LinkLabs domains and their citation count, total mentions, average rank, citation score, and share of voice are below.

| Domain | Citation Count | Total Mentions | Avg Rank | Avg Citation Score | Avg Share of Voice (%) |
| --- | --- | --- | --- | --- | --- |
| apple.com | 239,076 | 303,247 | 9.02 | 40.14 | 13.49 |
| yahoo.com | 186,926 | 223,343 | 10.42 | 33.24 | 11.34 |
| searchatlas.com | 140,708 | 194,796 | 6.94 | 46.80 | 15.35 |
| businessinsider.com | 115,440 | 155,226 | 9.03 | 39.83 | 12.60 |
| sourceforge.net | 90,037 | 116,327 | 11.31 | 34.77 | 10.26 |
| chamberofcommerce.com | 78,464 | 138,548 | 7.86 | 41.49 | 16.09 |
| cnet.com | 57,405 | 77,444 | 9.27 | 36.81 | 11.51 |
| vocal.media | 45,834 | 56,278 | 12.05 | 27.93 | 6.07 |
| feedspot.com | 38,906 | 55,292 | 9.48 | 42.50 | 13.17 |
| dev.to | 33,026 | 43,182 | 10.28 | 36.14 | 11.04 |
| scam-detector.com | 30,072 | 31,498 | 10.69 | 35.98 | 11.49 |
| blogspot.com | 28,621 | 33,176 | 10.35 | 33.63 | 10.88 |
| uk.com | 27,952 | 34,532 | 9.19 | 36.42 | 10.87 |
| fitsmallbusiness.com | 25,207 | 42,111 | 9.23 | 39.68 | 12.68 |
| entrepreneur.com | 23,636 | 31,043 | 9.42 | 37.53 | 11.01 |

Evaluating domain-level performance clarifies how authority, brand recognition, and topical relevance influence visibility across large language model platforms. The results show clear separation between domains that benefit from scale and those that earn prominence through relevance and trust signals.

Authority Signals Among the Most Cited LinkLabs Domains

Apple.com and Yahoo.com dominate raw citation volume within the LinkLabs network, and CNET.com also places in the top 10, which confirms inclusion of major consumer and technology brands across AI-generated responses.

Apple.com records 239,076 citations, Yahoo.com records 186,926 citations, and CNET.com records 57,405 citations, which reflects scale-driven visibility supported by brand authority and broad topical coverage rather than preferential positioning.

SearchAtlas.com emerges as the strongest qualitative performer across the measured domains. It ranks third by citation volume with 140,708 citations, while achieving the highest average citation score at 46.80, the best average rank at 6.94, and the second-highest share of voice at 15.35% (behind chamberofcommerce.com at 16.09%), which indicates consistent authoritative placement driven by contextual relevance and trust signals rather than passive inclusion.
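A quick way to check which domain leads on each metric is to take the maximum (or, for rank, the minimum) over the top 15 table. The sketch below does this with the published values; the tuple layout and names are illustrative assumptions.

```python
# (domain, citation_count, avg_rank, avg_citation_score, avg_share_of_voice)
top_domains = [
    ("apple.com", 239_076, 9.02, 40.14, 13.49),
    ("yahoo.com", 186_926, 10.42, 33.24, 11.34),
    ("searchatlas.com", 140_708, 6.94, 46.80, 15.35),
    ("businessinsider.com", 115_440, 9.03, 39.83, 12.60),
    ("sourceforge.net", 90_037, 11.31, 34.77, 10.26),
    ("chamberofcommerce.com", 78_464, 7.86, 41.49, 16.09),
    ("cnet.com", 57_405, 9.27, 36.81, 11.51),
    ("vocal.media", 45_834, 12.05, 27.93, 6.07),
    ("feedspot.com", 38_906, 9.48, 42.50, 13.17),
    ("dev.to", 33_026, 10.28, 36.14, 11.04),
    ("scam-detector.com", 30_072, 10.69, 35.98, 11.49),
    ("blogspot.com", 28_621, 10.35, 33.63, 10.88),
    ("uk.com", 27_952, 9.19, 36.42, 10.87),
    ("fitsmallbusiness.com", 25_207, 9.23, 39.68, 12.68),
    ("entrepreneur.com", 23_636, 9.42, 37.53, 11.01),
]

leaders = {
    "citation_count": max(top_domains, key=lambda d: d[1])[0],
    "avg_rank": min(top_domains, key=lambda d: d[2])[0],  # lower rank is better
    "avg_citation_score": max(top_domains, key=lambda d: d[3])[0],
    "avg_share_of_voice": max(top_domains, key=lambda d: d[4])[0],
}

for metric, domain in leaders.items():
    print(f"{metric:<20} {domain}")
```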

Platform Influence on Domain-Level Citation Outcomes

The platform on which each top domain achieves its best citation count, mentions, rank, citation score, and share of voice is below.

| Domain | Best Citation Count | Best Mentions | Best Rank | Best Citation Score | Best Share of Voice |
| --- | --- | --- | --- | --- | --- |
| apple.com | Grok | Grok | Copilot | Copilot | Gemini |
| yahoo.com | Grok | Grok | Gemini | Gemini | Gemini |
| searchatlas.com | Grok | Grok | Copilot | Copilot | Copilot |
| businessinsider.com | Grok | Grok | Gemini | Gemini | Gemini |
| sourceforge.net | Grok | Grok | Gemini | Gemini | Gemini |
| chamberofcommerce.com | OpenAI | Copilot | Gemini | Gemini | Gemini |
| cnet.com | Grok | Grok | Copilot | Perplexity | Gemini |
| vocal.media | Google AI Mode | Google AI Mode | Perplexity | Perplexity | Perplexity |
| feedspot.com | Grok | Grok | Gemini | Gemini | Gemini |
| dev.to | Grok | Grok | Copilot | Copilot | Copilot |
| scam-detector.com | OpenAI | OpenAI | Copilot | Google AI Mode | Copilot |
| blogspot.com | Grok | Grok | Gemini | Gemini | Gemini |
| uk.com | Grok | Grok | Copilot | Copilot | Copilot |
| fitsmallbusiness.com | Grok | Copilot | Copilot | Copilot | Copilot |
| entrepreneur.com | Grok | Grok | Copilot | Copilot | Copilot |

Grok is the leading source of citation volume for 12 of the top 15 cited domains, which reinforces its role as a scale-oriented retrieval system.

Gemini and Copilot consistently outperform on quality metrics, delivering stronger average ranks, higher citation scores, and elevated share of voice, which demonstrates that platform design materially influences whether domains achieve prominence rather than simple inclusion within AI-generated responses.
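The platform pattern can be tallied directly from the best-platform table. The sketch below counts, for three of its columns, how many of the top 15 domains each platform leads; the dictionary structure and names are illustrative, and the values are copied from the table.

```python
from collections import Counter

# domain: (best_citation_count, best_rank, best_share_of_voice)
best_platform = {
    "apple.com": ("grok", "copilot", "gemini"),
    "yahoo.com": ("grok", "gemini", "gemini"),
    "searchatlas.com": ("grok", "copilot", "copilot"),
    "businessinsider.com": ("grok", "gemini", "gemini"),
    "sourceforge.net": ("grok", "gemini", "gemini"),
    "chamberofcommerce.com": ("openai", "gemini", "gemini"),
    "cnet.com": ("grok", "copilot", "gemini"),
    "vocal.media": ("google_ai_mode", "perplexity", "perplexity"),
    "feedspot.com": ("grok", "gemini", "gemini"),
    "dev.to": ("grok", "copilot", "copilot"),
    "scam-detector.com": ("openai", "copilot", "copilot"),
    "blogspot.com": ("grok", "gemini", "gemini"),
    "uk.com": ("grok", "copilot", "copilot"),
    "fitsmallbusiness.com": ("grok", "copilot", "copilot"),
    "entrepreneur.com": ("grok", "copilot", "copilot"),
}

volume_leaders = Counter(row[0] for row in best_platform.values())
rank_leaders = Counter(row[1] for row in best_platform.values())
voice_leaders = Counter(row[2] for row in best_platform.values())

print("best citation count:", volume_leaders.most_common())
print("best rank:          ", rank_leaders.most_common())
print("best share of voice:", voice_leaders.most_common())
```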

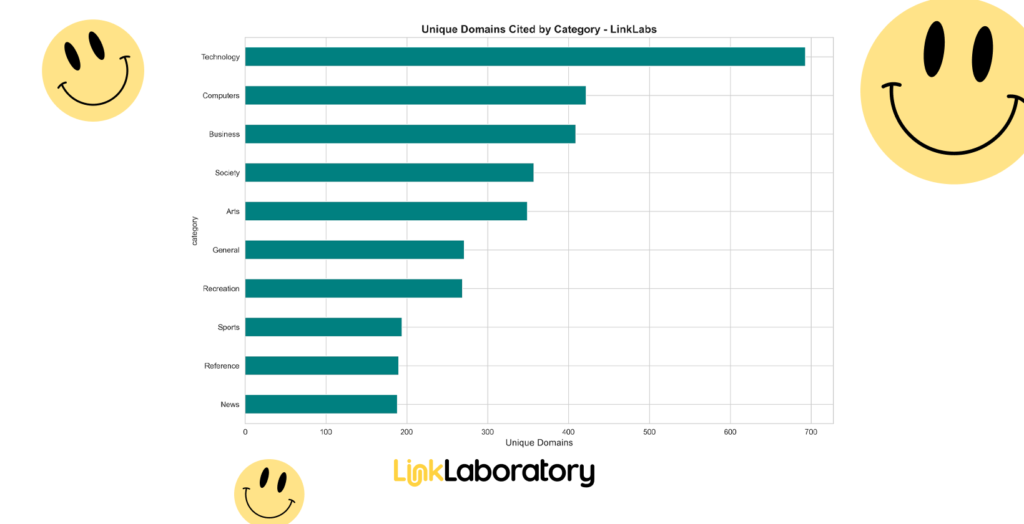

Which Distribution Categories Drive the Most LLM Citations?

The top-performing categories, their approximate unique domain counts, and their share of cited domains are below.

| Rank | Category | Unique Domains (Approx.) | % of Cited Domains |
| --- | --- | --- | --- |
| 1 | Technology | ~700 | 6.3% |
| 2 | Computers | ~420 | 3.8% |
| 3 | Business | ~400 | 3.6% |
| 4 | Society | ~350 | 3.1% |
| 5 | Arts | ~330 | 3.0% |
| 6 | General | ~270 | 2.4% |
| 7 | Recreation | ~265 | 2.4% |
| 8 | Sports | ~195 | 1.8% |
| 9 | Reference | ~190 | 1.7% |
| 10 | News | ~190 | 1.7% |

Category-level performance reveals which verticals deliver the most citations, highlighting where topical authority, publisher density, and LLM preference intersect. This perspective helps identify not just the largest categories, but the ones with measurable influence on AI-driven content discovery.

Technology and Business Lead in Citation Volume

Technology dominates category-level visibility with approximately 700 cited domains, representing 6.3% of all LinkLabs domains appearing in LLM citations. This count is roughly 1.7 times that of the next highest category, reinforcing the concentration of AI visibility within technical subject matter.

Computers and Business follow with approximately 420 and 400 cited domains, respectively. Combined with Technology, these 3 categories account for roughly 1,520 unique domains, confirming that B2B, software, and commercial topics receive preferential treatment in AI-generated responses.

Niche Categories Contribute Broad Topical Reach

Beyond the top categories, citation distribution extends across a long tail of specialized verticals. The top 10 categories include approximately 3,310 domains, while the remaining 70% of cited domains are distributed across niche categories, enabling broad topical coverage while preserving a core concentration in high-impact technical and business segments.
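The category shares can be reproduced from the approximate domain counts and the 11,136 cited domains. The sketch below recomputes each share, the top 3 and top 10 totals, and the long-tail remainder; because the counts are approximate, the results are approximate too, and the variable names are illustrative.

```python
CITED_DOMAINS = 11_136

# Approximate unique cited domains per category, from the table above.
categories = {
    "Technology": 700,
    "Computers": 420,
    "Business": 400,
    "Society": 350,
    "Arts": 330,
    "General": 270,
    "Recreation": 265,
    "Sports": 195,
    "Reference": 190,
    "News": 190,
}

# Share of all cited domains, in percent.
shares = {name: count / CITED_DOMAINS * 100 for name, count in categories.items()}

top3_total = sum(list(categories.values())[:3])
top10_total = sum(categories.values())
top10_share = top10_total / CITED_DOMAINS * 100
long_tail_share = 100 - top10_share
tech_vs_next = categories["Technology"] / categories["Computers"]

for name, pct in shares.items():
    print(f"{name:<11} {pct:.1f}%")
print(f"top 3: {top3_total:,} domains, top 10: {top10_total:,} domains")
print(f"top 10 share: {top10_share:.1f}%, long tail: {long_tail_share:.1f}%")
print(f"Technology vs. Computers: {tech_vs_next:.2f}x")
```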

How Strong Is LinkLabs’ Network Performance Across LLMs?

Evaluating overall quality metrics shows how LinkLabs domains perform collectively in AI citations. These metrics capture positioning, authority, and visibility across cited domains, establishing baseline network performance and identifying optimization opportunities.

The LinkLabs network-wide performance metrics and benchmarks are below.

| Metric | Value | Top 15 Average | Gap | Top Performer | Interpretation |
| --- | --- | --- | --- | --- | --- |
| Average Rank | 11.04 | 9.63 | -1.41 | 6.94 | Typically appears around 11th position |
| Average Citation Score | 32.89 | 37.85 | +4.96 | 46.80 | Moderate authority (0-100 scale) |
| Average Share of Voice | 9.27% | 11.98% | +2.71% | 16.09% | Captures approximately 9% of competitive attention |

Network-Wide Performance Metrics

Across the LinkLabs network, domains typically appear around the 11th position in LLM citations, with an average rank of 11.04. The average citation score is 32.89 on a 0 to 100 scale, indicating moderate authority in AI responses.

Share of voice averages 9.27%, meaning LinkLabs domains capture roughly one-tenth of competitive attention across analyzed platforms. These values confirm consistent network contribution, with variation across publisher tiers.

Top 15 Domains Outperform the Network

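Benchmarking the top 15 performing domains highlights the impact of publisher selection. These domains achieve an average rank of 9.63, a citation score of 37.85, and a share of voice of 11.98%, all better than the network averages (a lower rank number indicates more prominent placement). The best individual values within this group are a rank of 6.94 and a citation score of 46.80, both from searchatlas.com, and a share of voice of 16.09% from chamberofcommerce.com.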

This confirms that a small group of high-authority domains drives disproportionate visibility. Prioritizing these publishers provides a clear path to increasing network impact and ROI from LLM citations.
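The gap column in the benchmark table is the top 15 average minus the network value. The sketch below reproduces it from the published figures and flags whether each gap is an improvement, keeping in mind that a lower rank is better. The names and the `higher_is_better` flag are illustrative assumptions.

```python
# metric: (network_value, top15_average, higher_is_better), from the benchmark table.
benchmarks = {
    "avg_rank": (11.04, 9.63, False),
    "avg_citation_score": (32.89, 37.85, True),
    "avg_share_of_voice": (9.27, 11.98, True),
}

gaps = {}
for metric, (network, top15, higher_is_better) in benchmarks.items():
    gap = round(top15 - network, 2)
    # A negative gap is an improvement for rank, a positive one for the other metrics.
    improved = gap > 0 if higher_is_better else gap < 0
    gaps[metric] = (gap, improved)
    print(f"{metric:<20} gap {gap:+.2f}  improved: {improved}")
```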

How Should Businesses Leverage LinkLabs Citation Insights?

Treat LLM citation performance as a primary visibility KPI and operationalize continuous measurement across Copilot, Grok, OpenAI, Gemini, Perplexity, and Google AI Mode. Quantify citation frequency, rank position, and authority signals to detect pre-impression brand exposure within AI-generated responses.

Prioritize categories and domains with demonstrated citation elasticity and reallocate resources toward Technology, Computers, and Business to maximize yield efficiency. Concentrate production on LLM-favored publishers to compound visibility gains and accelerate return on content investment.

Engineer content for topical authority and semantic determinism by enforcing narrow scope, factual density, and schema-aligned structure. Optimize for high-quality citation contexts by matching platform-specific retrieval patterns and response synthesis constraints.

Benchmark platform performance using penetration rate, average rank, and citation score to identify distribution asymmetries and optimization gaps. Balance scale from volume-centric engines with quality-dominant platforms to stabilize exposure across heterogeneous LLM behaviors.

Integrate citation metrics into a unified analytics layer alongside search performance to enable cross-channel optimization. Operationalize AI visibility governance by linking content execution, category focus, and platform targeting to measurable authority signals.

What Are the Study’s Limitations?

Every study has boundaries. The limitations of the LinkLabs analysis are listed below.

- Domain Coverage. Not all publisher domains were cited. While 11,136 of 35,633 LinkLabs domains (31.3%) appeared in at least 1 LLM citation, 68.7% remained uncited. This uneven coverage influences conclusions about category performance and platform effectiveness.

- Platform Variability. LLM platforms differ in design, retrieval capabilities, and content synthesis. Copilot, Gemini, and Perplexity emphasize high-quality citations, while Grok prioritizes volume. These differences affect penetration rates, citation scores, and average positioning, limiting direct cross-platform comparability.

- Temporal Scope. Data collection spanned just under 3 months (October 1 to December 24, 2025). This period captures a strong snapshot of AI citation trends but may not reflect longer-term shifts, seasonal variations, or emerging platform updates.

- Publisher Type and Authority. LinkLabs includes a mix of high-authority, mid-tier, and niche publishers. Lower-authority and regional domains may appear less frequently due to limited indexing or lower relevance, potentially skewing category and top-domain analyses toward larger or tech-focused sites.

Despite these limits, the study establishes a clear baseline for understanding how LinkLabs domains perform across LLM platforms. Future research should expand the timeframe, incorporate additional publishers, and explore longitudinal trends to capture evolving patterns of AI citation, platform preference, and category influence.